The book contains an initial set of exercises for Chapters 3, 4, and 5 (Part II: Ways of Using AI in Games). Below we provide further details about these exercises and propose additional exercises for the interested reader. The list of exercises will be updated regularly to follow current trends in game artificial intelligence. If you have suggestions for exercises we should include in the following list, please contact us via email at gameaibook [ at ] gmail [ dot ] com.

Chapter 3: Playing Games

Ms Pac-Man Agent

The Ms Pac-Man vs Ghost Team competition is a contest held at several artificial intelligence and machine learning conferences around the world, in which AI controllers for Ms Pac-Man and the Ghost Team compete for the highest ranking. For this exercise, you will have to develop a number of Ms Pac-Man AI players to compete against the ghost team controllers included in the software package. The package is a simulator written entirely in Java with a well-documented interface. Although developing two to three different agents within a semester has proven to be good educational practice, we leave the final number of Ms Pac-Man agents for you (or your course instructor) to decide.

Our proposed exercise is as follows:

- Download the Ms Pac-Man Java-based framework. (NB: Use a Java IDE such as Eclipse or NetBeans to open the framework and run the main class “Executor.java”.)

- Use two of the AI methods covered in Chapter 2 of the book to implement two different Ms Pac-Man agents.

- Hint: You may wish to start from methods with available Java templates, such as Monte Carlo Tree Search, Multi-Layer Perceptron, Genetic Algorithm, and Q-learning, and then proceed to try out hybrid algorithms such as neuroevolution. Remember to revisit the discussion of representation and utility at the beginning of Chapter 2 and the Ms Pac-Man examples for each algorithm covered in that chapter.

- Design a performance measure and compare the performance of the two algorithms

- Hint: What is good performance in Ms Pac-Man? Maximising the score, completing levels, a mix of the two? Try out different measures of performance and see how your algorithms compare.
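One way to act on this hint is to fold score and level progress into a single weighted measure and summarise it per agent over many games. The sketch below is framework-agnostic: the `GameResult` record, its fields, the weights and the normalisers are hypothetical assumptions, not part of the Ms Pac-Man framework; you would fill the records from whatever your own game loop reports.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class GameResult:
    # Hypothetical per-game record filled in by your own game loop;
    # this is not a class from the Ms Pac-Man framework.
    score: int
    levels_completed: int


def composite_performance(results, w_score=0.5, w_levels=0.5,
                          score_norm=10_000, level_norm=4):
    """Mean and standard deviation of a weighted mix of normalised score and
    levels completed. The weights and normalisers are assumptions to tune."""
    values = [w_score * r.score / score_norm + w_levels * r.levels_completed / level_norm
              for r in results]
    spread = stdev(values) if len(values) > 1 else 0.0
    return mean(values), spread


def rank_agents(agent_results):
    """Sort agents by mean composite performance, best first."""
    summary = {name: composite_performance(rs) for name, rs in agent_results.items()}
    return sorted(summary.items(), key=lambda kv: kv[1][0], reverse=True)


# Usage with made-up numbers, purely to show the call pattern.
ranking = rank_agents({
    "mcts": [GameResult(8000, 2), GameResult(12000, 4)],
    "qlearning": [GameResult(3000, 1), GameResult(5000, 1)],
})
```

Re-running `rank_agents` with different `w_score` and `w_levels` values is a quick way to see whether the ranking of your agents depends on what you decide "good performance" means.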

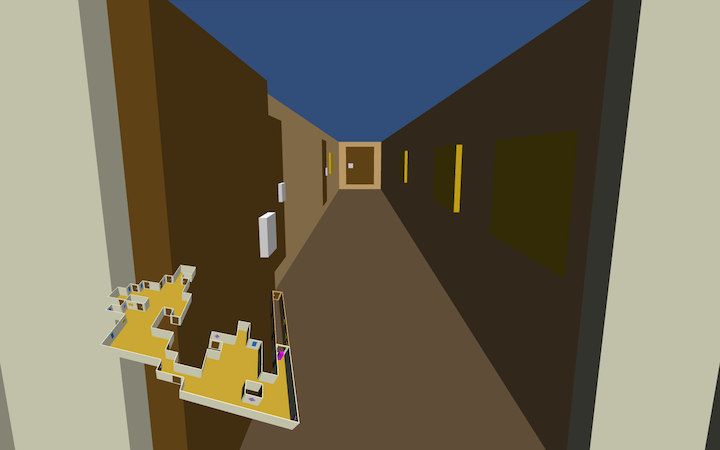

MiniDungeons Agent

MiniDungeons is a simple turn-based roguelike puzzle game, implemented by Holmgard, Liapis, Togelius and Yannakakis as a benchmark problem for modeling the decision-making styles of human players. In every MiniDungeons level, the hero (controlled by the player) starts at the level’s entrance and must navigate to the level exit while collecting treasures, killing monsters and drinking healing potions. For this exercise, you will have to develop a number of AI players able to complete all the dungeons in the MiniDungeons simulator. The repository provided by Antonios Liapis is written entirely in Java and provides a bare-bones ASCII version of the full MiniDungeons game, which is straightforward to use and fast to simulate.

Our proposed exercise is as follows:

- Download the MiniDungeons Java-based framework. Use a Java IDE such as Eclipse or NetBeans to load the sources and add the libraries.

- The easiest way to test the project is to use the three main classes in the experiment package.

- SimulationMode performs a number of simulations of a specific agent on one or more dungeons and reports the outcomes as metrics and heatmaps of each playthrough; these reports are also saved in a folder specified in the outputFolder variable.

- DebugMode runs one simulation on one map step by step, allowing users to see what the agent does at each action; it shows the ASCII map and the hero’s current HP. Additional debug information can be added to this view as needed.

- CompetitionMode is intended for testing how each agent specified in the controllerNames array fares against every other agent on a number of metrics, such as treasures collected and monsters killed. This mode is suited to conference or classroom competitions where agents created by different users compete on one or more dimensions monitored by the system.

- Use two AI methods covered in Chapter 2 of the book to implement two different MiniDungeons agents.

- Hint: You may wish to start from methods with available Java templates, such as Best First Search, Monte Carlo Tree Search and Q-learning, and then proceed to try out hybrid algorithms such as evolving neural networks. Implementation examples of Q-learning and neuroevolution for MiniDungeons are described here and here respectively. Remember to revisit the discussion of representation and utility at the beginning of Chapter 2.

- Compare the performance of your agents in SimulationMode, or perform a competition with all agents in the class through CompetitionMode.

- Hint: Both SimulationMode and CompetitionMode output a broad range of metrics. When comparing your agents, reflect on what counts as good performance: killing more monsters, collecting all the treasure, or surviving to reach the exit? These are different decision-making priorities, which have also been explored in MiniDungeons research on procedural personas.

Chapter 4: Generating Content

Maze Generation

Maze generation is a very popular type of level generation and is relevant to several game genres. In the first exercise, we recommend that you develop a maze generator using both a constructive and a search-based procedural content generation (PCG) approach, and compare their performance.

For our proposed exercise, you are asked to:

- Go through the Unity 3D maze generation tutorial by Catlike Coding

- Apply a constructive PCG method, from those covered in the book (Section 4.3) or beyond, to generate mazes in Unity.

- Also apply a search-based PCG method (Section 4.3) for the same purpose.

- Hint: How should you represent a maze? Directly as tiles or indirectly as rooms or corridors? What should a fitness function look like? The distance from start to exit, the complexity of the maze expressed as branching corridors, or some form of spatial navigation engagement function? Play with different fitness function designs, observe the generated mazes and discuss their differences.

- Evaluate the generators using one or more of the methods covered in Section 4.6 and compare their performance.

- Hint: Try out expressivity analysis as a first step in your evaluation. Then, if time allows, involve actual players and ask for their feedback.
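As a starting point, here is a minimal sketch of one constructive method, the randomized depth-first search ("recursive backtracker") maze algorithm, written in Python rather than Unity C# for brevity; it is not the code from the Catlike Coding tutorial, which may use a different algorithm. It also includes two candidate measures from the hints: the shortest start-to-exit distance (via breadth-first search) and the number of dead ends, as a rough proxy for branching. The same measures could serve as fitness terms for the search-based generator, and sampling many mazes and plotting the resulting pairs is a simple form of expressivity analysis.

```python
import random
from collections import deque

DIRS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def dfs_maze(width, height, seed=None):
    """Constructive generator: randomized depth-first search ("recursive backtracker").
    Cells live at odd tile coordinates; returns rows of '#' (wall) and '.' (floor)."""
    rng = random.Random(seed)
    grid = [["#"] * (2 * width + 1) for _ in range(2 * height + 1)]
    grid[1][1] = "."
    visited, stack = {(0, 0)}, [(0, 0)]
    while stack:
        x, y = stack[-1]
        options = [(x + dx, y + dy) for dx, dy in DIRS
                   if 0 <= x + dx < width and 0 <= y + dy < height
                   and (x + dx, y + dy) not in visited]
        if not options:
            stack.pop()  # dead end: backtrack
            continue
        nx, ny = rng.choice(options)
        grid[y + ny + 1][x + nx + 1] = "."   # knock down the wall between the two cells
        grid[2 * ny + 1][2 * nx + 1] = "."   # open the new cell itself
        visited.add((nx, ny))
        stack.append((nx, ny))
    return ["".join(row) for row in grid]


def shortest_path_length(tiles, start, goal):
    """Breadth-first search over floor tiles (assumes a solid wall border);
    returns the number of steps, or None if the goal is unreachable."""
    dist, queue = {start: 0}, deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return dist[(r, c)]
        for dr, dc in DIRS:
            nxt = (r + dr, c + dc)
            if tiles[nxt[0]][nxt[1]] != "#" and nxt not in dist:
                dist[nxt] = dist[(r, c)] + 1
                queue.append(nxt)
    return None


def dead_ends(tiles):
    """Floor tiles with exactly one open neighbour: a rough proxy for branching."""
    return sum(
        1
        for r, row in enumerate(tiles)
        for c, ch in enumerate(row)
        if ch != "#" and sum(tiles[r + dr][c + dc] != "#" for dr, dc in DIRS) == 1
    )


def expressivity_sample(n, width, height):
    """(path length, dead ends) for n generated mazes, start top-left, exit bottom-right."""
    start, goal = (1, 1), (2 * height - 1, 2 * width - 1)
    return [(shortest_path_length(m, start, goal), dead_ends(m))
            for m in (dfs_maze(width, height, seed=i) for i in range(n))]
```

A depth-first search maze is "perfect" (exactly one path between any two cells), so every maze it produces is solvable; a search-based generator working directly on tiles offers no such guarantee and would need a reachability check like `shortest_path_length` in its fitness.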

Platformer Level Generation

The platformer level generation framework is based on the Infinite Mario Bros (Persson, 2008) framework, which has been used as the main framework of the Mario AI (and later Platformer AI) Competition since 2010. The competition featured several different tracks, including gameplay, learning, Turing test and level generation.

As a proposed exercise on procedural content generation, you are asked to download the level generation framework and:

- Apply a constructive PCG method, from those covered in the book, to generate platformer levels (Section 4.3).

- Apply a generate-and-test PCG method, from those covered in the book, for the same purpose (Section 4.3).

- Evaluate the generators using one or more of the methods covered in Section 4.6.

- Design a performance measure and compare the algorithms’ performance.
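The framework has its own level format and API, which are not reproduced here. To show the difference between the two requested approaches, the sketch below uses a deliberately simplified representation (one ground height per column, with 0 meaning a gap) and assumed jump limits: the constructive generator builds a level in a single pass, while generate-and-test wraps that generator in a playability test and resamples until a level passes. All names and constants are hypothetical.

```python
import random

# Simplified level representation (an assumption, not the framework's level format):
# one ground height per column, 0 meaning a gap.
MAX_JUMP_GAP = 4   # assumed widest gap, in columns, the player can clear
MAX_STEP_UP = 2    # assumed highest ledge, in tiles, the player can jump onto


def constructive_level(length, rng):
    """Constructive: a single left-to-right pass with random gaps and height changes."""
    heights, h = [], 2
    while len(heights) < length:
        roll = rng.random()
        if roll < 0.15 and heights and heights[-1] > 0:
            heights.extend([0] * rng.randint(1, 5))   # may exceed MAX_JUMP_GAP
        elif roll < 0.35:
            h = max(1, min(6, h + rng.choice([-2, -1, 1, 2, 3])))
            heights.append(h)
        else:
            heights.append(h)
    return heights[:length]


def playable(heights):
    """Test: starts and ends on ground, every gap is jumpable, no ledge is too high."""
    if not heights or heights[0] == 0 or heights[-1] == 0:
        return False
    last = 0  # index of the last ground column
    for i in range(1, len(heights)):
        if heights[i] == 0:
            continue
        if i - last - 1 > MAX_JUMP_GAP or heights[i] - heights[last] > MAX_STEP_UP:
            return False
        last = i
    return True


def generate_and_test(length, seed=0, max_tries=1000):
    """Generate-and-test: resample the constructive generator until a level passes."""
    rng = random.Random(seed)
    for attempt in range(1, max_tries + 1):
        level = constructive_level(length, rng)
        if playable(level):
            return level, attempt
    raise RuntimeError("no playable level found within max_tries")
```

The number of attempts needed per accepted level is one simple measure for the last bullet; in the real framework, a stronger test would be to let an AI agent play through each candidate level.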

Dungeon Generation

This repository, created by Antonios Liapis, contains algorithms for digger agents and cellular automata that generate dungeons and caves. The algorithms included are presented in Chapter 4 of this book and are detailed in the Constructive Generation Methods for Dungeons and Levels chapter of the PCG book.

A proposed exercise for dungeon generation is as follows:

- Go through the tutorial and download the different constructive methods contained in the repository.

- Implement a solver-based method (see Section 4.3.2) that generates dungeons.

- Hint: The key questions you should consider and experiment with are as follows: Which constraints are appropriate for dungeon generation? Which parameters best describe (represent) the dungeon?

- Once you design a performance measure, compare the performance of the solver-based generator against the digger agents and the cellular automata.
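Solver-based generation describes the desired dungeon declaratively, as variables and constraints, and leaves the search to a solver. A real project might use an answer set programming or constraint solver; to stay self-contained, the sketch below uses plain backtracking search instead. The room layout, the entities and every constraint are illustrative assumptions, chosen to mirror the hint's question of which constraints suit dungeon generation.

```python
import random

# Hypothetical room: '.' floor, '#' wall (not a map from the repository).
ROOM = [
    "########",
    "#......#",
    "#......#",
    "#..##..#",
    "#......#",
    "#......#",
    "########",
]
FLOOR = [(r, c) for r, row in enumerate(ROOM) for c, ch in enumerate(row) if ch == "."]
VARIABLES = ["entrance", "exit", "treasure1", "monster1", "treasure2", "monster2"]
SYMBOLS = {"entrance": "S", "exit": "E", "treasure1": "T", "treasure2": "T",
           "monster1": "M", "monster2": "M"}


def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


# Each constraint: (variables involved, predicate over their positions). All assumed.
CONSTRAINTS = [
    (("entrance", "exit"), lambda e, x: manhattan(e, x) >= 8),        # long traversal
    (("treasure1", "monster1"), lambda t, m: manhattan(t, m) == 1),   # guarded treasure
    (("treasure2", "monster2"), lambda t, m: manhattan(t, m) == 1),
    (("entrance", "monster1"), lambda e, m: manhattan(e, m) >= 3),    # safe start
    (("entrance", "monster2"), lambda e, m: manhattan(e, m) >= 3),
    (("treasure1", "treasure2"), lambda a, b: manhattan(a, b) >= 4),  # spread rewards
]


def consistent(assignment):
    """Check one-entity-per-tile and every constraint whose variables are all assigned."""
    if len(set(assignment.values())) < len(assignment):
        return False
    return all(pred(*(assignment[v] for v in vs))
               for vs, pred in CONSTRAINTS if all(v in assignment for v in vs))


def solve(assignment, rng):
    """Backtracking search: assign variables in order, pruning on the first violation."""
    if len(assignment) == len(VARIABLES):
        return dict(assignment)
    var = VARIABLES[len(assignment)]
    domain = FLOOR[:]
    rng.shuffle(domain)  # random value order gives different dungeons per seed
    for pos in domain:
        assignment[var] = pos
        if consistent(assignment):
            result = solve(assignment, rng)
            if result is not None:
                return result
        del assignment[var]
    return None


def generate_dungeon(seed=0):
    """Solve the constraints, then draw the entities onto a copy of the room."""
    layout = solve({}, random.Random(seed))
    if layout is None:
        raise ValueError("constraints are unsatisfiable for this room")
    grid = [list(row) for row in ROOM]
    for var, (r, c) in layout.items():
        grid[r][c] = SYMBOLS[var]
    return ["".join(row) for row in grid], layout
```

Experimenting with the constraint list (and with which variables describe the dungeon) is the core of the exercise; because every output satisfies the constraints by construction, they can also be reused as checks when evaluating the digger-agent and cellular-automata outputs.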

Chapter 5: Modeling Players

Here is a set of proposed exercises for modeling both the behavior and the experience of game players.

SteamSpy

SteamSpy is a rich dataset of thousands of games released on Steam, each described by several attributes. While not strictly a dataset about player modeling, SteamSpy offers an accessible and large dataset for game analytics. The data attributes of each game include the game’s name, the developer, the publisher, the score rank of the game based on user reviews, the number of owners of the game on Steam, the number of people who have played the game since 2009, the number of people who have played it in the last 2 weeks, the average and median playtime, the game’s price and the game’s tags.

We propose the following exercise using the SteamSpy data:

- Use the SteamSpy API to download all data attributes for a number of games contained in the dataset.

- Apply two unsupervised learning algorithms (Chapter 2) to the chosen set of games in order to find clusters within the space of games.

- Hint: Some attributes are better than others at yielding clusters of games (and their analytics). Can you find which? Would sequence mining help in this process?

- Choose a performance measure and compare the algorithms’ performance.
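A minimal k-means sketch for the clustering step, in plain Python so it runs without extra libraries. It assumes you have already fetched the games and turned the numeric attributes you chose (for example average playtime, price or score rank) into rows of numbers; downloading from the SteamSpy API and parsing its fields are not shown. The silhouette coefficient is one possible performance measure for the last bullet, and a second algorithm (such as hierarchical clustering) can be scored with the same function.

```python
import math
import random


def standardise(rows):
    """Z-score each column so attributes with large ranges do not dominate distances."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    sds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
           for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(row, means, sds)] for row in rows]


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        new = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # Keep the old centroid if a cluster ends up empty.
            new.append([sum(col) / len(members) for col in zip(*members)]
                       if members else centroids[j])
        if new == centroids:
            break
        centroids = new
    return labels, centroids


def silhouette(points, labels):
    """Mean silhouette coefficient in [-1, 1] (needs at least two clusters);
    higher means tighter, better-separated clusters."""
    def mean_dist(p, cluster, skip=None):
        ds = [math.dist(p, q) for j, q in enumerate(points)
              if labels[j] == cluster and j != skip]
        return sum(ds) / len(ds) if ds else None

    scores = []
    for i, p in enumerate(points):
        a = mean_dist(p, labels[i], skip=i)
        if a is None:  # singleton cluster: silhouette defined as 0
            scores.append(0.0)
            continue
        b = min(mean_dist(p, c) for c in set(labels) if c != labels[i])
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)
```

Trying the same pipeline on different subsets of attributes, and comparing the silhouette values, is one way to approach the hint about which attributes yield meaningful clusters.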

StarCraft: Brood War

The StarCraft: Brood War repository contains a number of datasets that include thousands of professional StarCraft replays. The various data mining papers and datasets, as well as replay websites, crawlers, packages and analyzers, have been obtained from Alberto Uriarte of Drexel University and are available here. In this exercise, you face the challenge of mining game replays with the aim of predicting a player’s strategy.

A possible game AI course exercise for StarCraft is as follows:

- Download one of the datasets available at the StarCraft: Brood War Data Mining repository.

- Implement two supervised learning algorithms in order to predict the player’s strategy based on a set of selected attributes. You can select from the algorithmic families of neural networks, decision trees and support vector machines, as covered in Chapter 2.

- Hint: Feature selection might be important as we don’t really know which attributes are relevant for predicting a player’s plan. Some initial results on this dataset can be found in this paper by Weber and Mateas.
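Of the three families named above, a decision tree is the quickest to sketch and also speaks to the feature-selection hint, because the splits it chooses show which attributes it relied on. Below is a minimal CART-style tree (Gini impurity, depth limit, thresholds at observed values) in plain Python; in practice a library implementation would be the usual choice. The feature vectors are assumed to be numeric attributes you extract from replays, such as a hypothetical "time of first barracks"; the datasets' actual attribute names and strategy labels are not reproduced here.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Find the (feature, threshold) pair that most reduces weighted Gini impurity."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Returns a leaf label (e.g. a strategy name string) or a tuple (feature, threshold, left, right)."""
    majority = Counter(labels).most_common(1)[0][0]
    split = best_split(rows, labels) if depth < max_depth else None
    if split is None:
        return majority
    f, t = split
    li = [i for i, r in enumerate(rows) if r[f] <= t]
    ri = [i for i, r in enumerate(rows) if r[f] > t]
    return (f, t,
            build_tree([rows[i] for i in li], [labels[i] for i in li], depth + 1, max_depth),
            build_tree([rows[i] for i in ri], [labels[i] for i in ri], depth + 1, max_depth))


def predict(tree, row):
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


def used_features(tree):
    """Feature indices the tree actually splits on: a crude feature-relevance signal."""
    if not isinstance(tree, tuple):
        return set()
    return {tree[0]} | used_features(tree[2]) | used_features(tree[3])
```

Evaluate on held-out replays (for example with k-fold cross-validation) rather than on the training games, and compare against your second algorithm on the same folds.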

Platformer Experience Dataset

The extensive analysis of player experience in Super Mario Bros (Nintendo, 1985) and our wish to further advance our knowledge and understanding of player experience have led to the Platformer Experience Dataset. This is the first open-access game experience corpus that contains multiple modalities of data from Super Mario Bros (Nintendo, 1985) players. The open-access database can be used to capture aspects of player experience based on behavioral and visual recordings of platform game players. In addition, the database contains aspects of the game context, such as level attributes, as well as demographic data of the players and self-reported annotations of experience in two forms: ratings and ranks. Towards building the most accurate player experience models, here are a number of questions you might wish to consider: Which AI methods should I use? How should I treat my output values? Which feature extraction and selection mechanisms should I consider?

As a semester project on player experience modeling, you are asked to:

- Carefully read the description of the Platformer Experience Dataset and download the corpus.

- Choose one or more of the affective or cognitive states that are available to model (the output of the model).

- Use two different supervised learning algorithms to build player experience models that predict both the ratings and the rank labels of experience

- Hint: Note that both ratings and ranks are ordinal data by nature. Also note that not all input attributes might be relevant for the modeling task. As an initial step, maybe consider only the behavioral data of the dataset as the input to your model. Then, consider adding the attributes that are extracted from the visual cues of the players.

- Hint: The Preference Learning Toolbox might be of help in your efforts.

- Choose a performance measure and compare the performance of the algorithms on predicting experience ratings and ranks.
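Because both ratings and ranks are ordinal, one option in the spirit of preference learning is to convert the labels into pairwise preferences and learn a function that orders sessions correctly, rather than regressing the label values. The sketch below uses a simple pairwise perceptron as a stand-in for the more capable methods you would typically use (for example RankSVM or preference-learning neural networks, such as those offered by the Preference Learning Toolbox, whose API is not shown here). It assumes all pairs are comparable; with ratings from different players, you may prefer to form pairs only within each player's own sessions. Pairwise accuracy, the share of held-out pairs ordered correctly, is one candidate performance measure that applies to both ratings and ranks.

```python
import random


def to_pairs(features, labels):
    """Turn ordinal labels (ratings or ranks) into pairwise preferences; ties are dropped."""
    pairs = []
    for i in range(len(labels)):
        for j in range(len(labels)):
            if labels[i] > labels[j]:
                pairs.append((features[i], features[j]))  # i preferred over j
    return pairs


def train_ranker(pairs, epochs=200, lr=0.1, seed=0):
    """Pairwise perceptron: learn weights w so that w.x_preferred > w.x_other."""
    rng = random.Random(seed)
    w = [0.0] * len(pairs[0][0])
    order = list(range(len(pairs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for k in order:
            better, worse = pairs[k]
            diff = [b - o for b, o in zip(better, worse)]
            if sum(wi * d for wi, d in zip(w, diff)) <= 0:  # misordered or tied
                w = [wi + lr * d for wi, d in zip(w, diff)]
    return w


def pairwise_accuracy(w, pairs):
    """Fraction of preference pairs the linear model orders correctly."""
    def score(x):
        return sum(wi * xi for wi, xi in zip(w, x))
    return sum(score(b) > score(o) for b, o in pairs) / len(pairs)
```

The learned weights also give a rough indication of which input features drive the predicted preference, which can help with the feature-selection question raised in the hint.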

Maze-Ball Dataset

The Maze-Ball dataset is a publicly available game experience corpus (released by Hector P. Martinez) that contains two modalities of data obtained from Maze-Ball players: their gameplay attributes and three physiological signals (blood volume pulse, heart rate and skin conductance). In addition, the database contains aspects of the game, such as features of the virtual camera placement. Finally, the dataset contains demographic data of the players and self-reported annotations of experience in two forms: ratings and ranks.

As with the Platformer Experience Dataset your aim, once again, is to construct the most accurate models of experience for the players of Maze-Ball. So, which modalities of input will you consider? Which annotations are more reliable for predicting player experience? How will your signals be processed? These are only a few of the possible questions you will encounter during your efforts.

A possible course project on the Maze-Ball dataset is similar to the one for the Platformer Experience Dataset above:

- Download the Maze-Ball dataset

- Choose one or more of the affective or cognitive states that are available to model (the output of the model).

- Use two different supervised learning algorithms to build player experience models that predict both the ratings and the rank labels of experience

- Hint: Note that both ratings and ranks are ordinal data by nature. Also note that not all input attributes might be relevant for the modeling task. As an initial step, maybe consider only the behavioral data of the dataset as the input to your model. Then, consider features you could extract from the three physiological signals available in the dataset.

- Choose a performance measure and compare the performance of the algorithms on predicting experience ratings and ranks.
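A common first step with physiological signals is to summarise each signal over a game session (or a time window) with a handful of statistical features. The sketch assumes each signal is available as a list of samples at a known sampling rate; the feature set is a typical but illustrative choice, not the one used in any specific Maze-Ball study, and signal-specific features (such as heart rate variability measures derived from blood volume pulse) are left out.

```python
import statistics


def signal_features(samples, sample_rate_hz):
    """Statistical features of a 1-D physiological signal (e.g. heart rate or
    skin conductance); needs at least two samples."""
    n = len(samples)
    t = [i / sample_rate_hz for i in range(n)]
    mean_t, mean_x = statistics.fmean(t), statistics.fmean(samples)
    # Least-squares trend of the signal, in signal units per second.
    slope = (sum((ti - mean_t) * (xi - mean_x) for ti, xi in zip(t, samples))
             / sum((ti - mean_t) ** 2 for ti in t))
    diffs = [b - a for a, b in zip(samples, samples[1:])]
    return {
        "mean": mean_x,
        "std": statistics.pstdev(samples),
        "min": min(samples),
        "max": max(samples),
        "range": max(samples) - min(samples),
        "slope": slope,
        "mean_abs_diff": statistics.fmean(abs(d) for d in diffs),
        "first_last_diff": samples[-1] - samples[0],
    }
```

Computing these features per signal and per session, then concatenating them with the gameplay attributes, gives an input vector that can go through the same feature-selection and learning steps as the behavioral data.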